Abstract

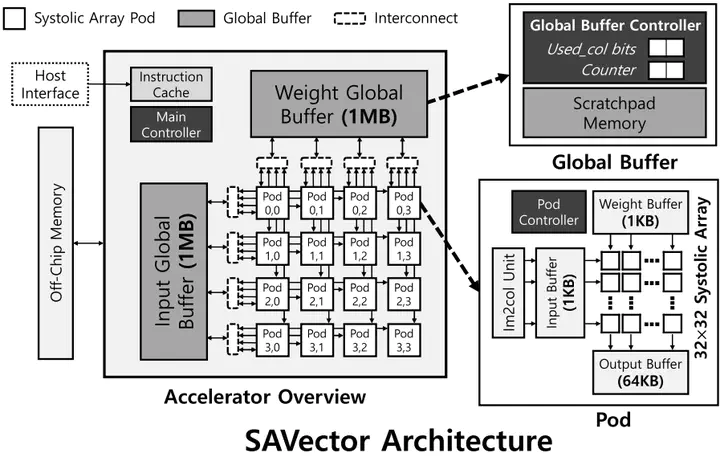

Conventional DNN inference accelerators are built from a few (up to four) large systolic arrays. Because such a scale-up architecture often suffers from low utilization, a scale-out architecture has been proposed, in which a single accelerator has tens of pods and each pod has a small systolic array. Although the scale-out architecture is promising, it increases off-chip memory accesses because the pods fetch duplicate tiles of the same tensors. Prior work has proposed shared buffer structures to address this problem, but those architectures suffer performance degradation from shared buffer access latency. We observe that all the pods access the same rows of inputs and weights within a short time window. Based on this observation, we propose a new inference accelerator architecture called Vectored Systolic Arrays (SAVector). SAVector consists of a new two-level on-chip buffer architecture and a tensor tile scheduling technique. In the new buffer architecture, global buffers are shared by all the pods and hold the rows the pods have in common, while each pod also has a tiny dedicated buffer. SAVector monitors memory access behavior to decide when to prefetch data and when to flush it. In our evaluation, SAVector incurs an off-chip memory access count very similar to that of the scale-up architecture and achieves a 52% reduction in energy-delay product (EDP). SAVector also achieves a 27% EDP reduction over prior work by mitigating the performance degradation caused by global buffer access latency.
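To make the duplicate-tile problem concrete, the following is a minimal toy model, not the simulator or scheduler used in this work. It assumes a hypothetical grid of pods in which each pod computes one output tile and needs one input row-tile and one weight column-tile; the function name `offchip_fetches` and the grid sizes are illustrative assumptions, and buffer capacity, the reduction dimension, and access latency are ignored.

```python
from itertools import product


def offchip_fetches(m_tiles: int, n_tiles: int, shared: bool) -> int:
    """Count off-chip tile fetches for one pass over an m_tiles x n_tiles output grid.

    Assumption (illustrative only): pod (i, j) computes output tile (i, j) and
    needs input row-tile i and weight column-tile j.
    """
    pods = list(product(range(m_tiles), range(n_tiles)))
    if shared:
        # A shared global buffer fetches each distinct tile once for all pods.
        needed = {("input", i) for i, _ in pods} | {("weight", j) for _, j in pods}
        return len(needed)
    # With only private buffers, every pod fetches its own two tiles.
    return 2 * len(pods)


private_count = offchip_fetches(4, 8, shared=False)
shared_count = offchip_fetches(4, 8, shared=True)
print(private_count, shared_count)
```

Under these assumptions, 32 pods with private buffers issue 64 tile fetches, while a shared global buffer needs only 12, which is the kind of gap a shared buffer level is meant to close.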